Release date: 2017-02-22

Over the past two years, deep learning has made remarkable breakthroughs in medicine, in speech recognition, image recognition, and text comprehension alike.

In speech recognition, a collaboration between Mayo Clinic and the Israeli voice-analysis company Beyond Verbal found 13 voice features associated with coronary heart disease, one of which was strongly associated with it.

In natural language processing, IBM's Watson can, within 17 seconds, read 3,469 medical monographs, 248,000 papers, 69 treatment plans, 61,540 experimental records, and 106,000 clinical reports, together with the patient indicators entered by the doctor, and from all this information arrive at a recommended personalized treatment plan.

At the same time, with advances in computer vision, deep learning has made major breakthroughs in medical imaging. Beyond teaching machines to "hear" and to "read", artificial intelligence can teach machines to "see" the world and, on that basis, help doctors diagnose disease. More than 90% of medical data comes from medical imaging, so the field offers a huge amount of data for deep learning, and there is a real need to make doctors' image reading more efficient. Deep learning in medical imaging may therefore be the first to enter clinical practice.

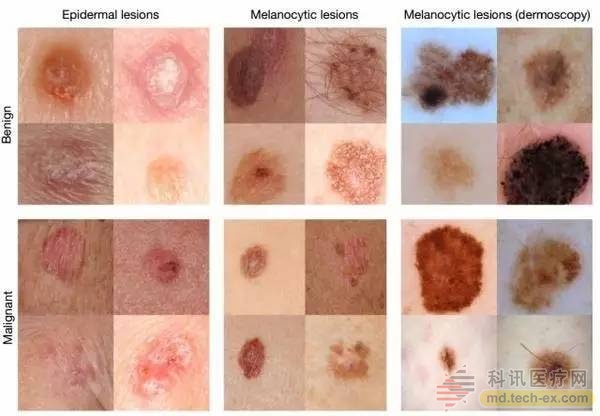

Trained on 130,000 images, deep learning identifies skin cancer as accurately as human doctors

Skin cancer is the most common malignant tumor in humans. Although it appears on the surface of the skin, people often mistake it for a naturally occurring mole, delaying treatment until it is too late by the time it is diagnosed. Singularity Cake still remembers that in the movie "If You Are the One 2", Sun Honglei played a man whose black mole, present since childhood, turned into malignant melanoma. In the end, he chose to jump into the sea to take his own life.

The 5-year survival rate for melanoma detected early is around 97%, but it drops to about 14% when the disease is detected at a late stage, so early detection can have a huge impact on the outcome. Skin cancer screening relies mainly on visual diagnosis: clinical screening usually comes first, followed by dermoscopic analysis, biopsy, and histopathological examination. Is there a simpler way to screen for skin cancer? Could a smartphone do it?

Using deep convolutional neural network (CNN) technology, researchers at Stanford University took this idea a step further: they took a Google algorithm originally trained to recognize everyday images such as cats and dogs and trained it further on 130,000 skin lesion images to identify skin cancer. The system was tested against 21 dermatologists in two rounds: keratinocyte carcinoma versus benign seborrheic keratosis, and malignant melanoma versus common benign moles. The first round represents identification of the most common skin cancer, the second identification of the deadliest. On both tasks the deep convolutional neural network performed at the level of all the experts tested, showing that this artificial intelligence system identifies skin cancer as well as dermatologists do. The research was published in Nature in January 2017 [1].
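The key move here is transfer learning: a network pretrained on ordinary photographs is reused for a new medical task. The sketch below shows only the simplest form of the idea, assuming a frozen feature extractor with a new logistic-regression "head" trained on the new labels. `pretrained_features` is a hypothetical stand-in for a pretrained CNN (it merely computes brightness statistics), and the toy "lesion" data is invented; this does not reproduce how the Stanford team actually trained their network.

```python
import math
import random

def pretrained_features(x):
    """Hypothetical stand-in for a frozen, pretrained CNN backbone.

    Maps a raw input (a list of pixel intensities) to a small feature
    vector. Nothing in this function is updated during training.
    """
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [mean, math.sqrt(var), 1.0]  # last entry acts as a bias term

def sigmoid(z):
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_head(samples, labels, lr=0.5, epochs=200):
    """Train only the new classification head (logistic regression, SGD)."""
    feats = [pretrained_features(x) for x in samples]  # backbone frozen: compute once
    w = [0.0] * len(feats[0])
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)))
            # Gradient of the log loss with respect to w is (p - y) * f
            w = [wi - lr * (p - y) * fi for wi, fi in zip(w, f)]
    return w

def predict(w, x):
    f = pretrained_features(x)
    return 1 if sigmoid(sum(wi * fi for wi, fi in zip(w, f))) >= 0.5 else 0

# Invented toy data: "benign" inputs are dark and uniform,
# "malignant" inputs are brighter and more irregular.
rng = random.Random(0)
benign = [[0.2 + rng.uniform(-0.05, 0.05) for _ in range(16)] for _ in range(20)]
malignant = [[0.7 + rng.uniform(-0.3, 0.3) for _ in range(16)] for _ in range(20)]
X = benign + malignant
y = [0] * 20 + [1] * 20

w = train_head(X, y)
acc = sum(predict(w, x) == t for x, t in zip(X, y)) / len(y)
print("training accuracy:", acc)
```

Freezing the backbone keeps the number of trainable parameters tiny, which is why transfer learning helps when labeled medical images are scarce; fine-tuning deeper layers as well is the usual next step when more data is available.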

The researchers did not stop there; they also want to bring the system to smartphones. Andre Esteva, a graduate student in the Thrun lab and one of the paper's lead authors, said: "Everyone now has a supercomputer in their pocket with lots of sensors, including a camera. What if we could use it to screen for skin cancer? Or for other diseases?"

Google uses deep learning to diagnose diabetic retinopathy

Diabetic retinopathy is a cause of blindness that is drawing increasing attention. Currently, 415 million people with diabetes worldwide are at risk of retinopathy. If detected in time, the disease can be treated; if it is not diagnosed in time, it may lead to irreversible blindness.

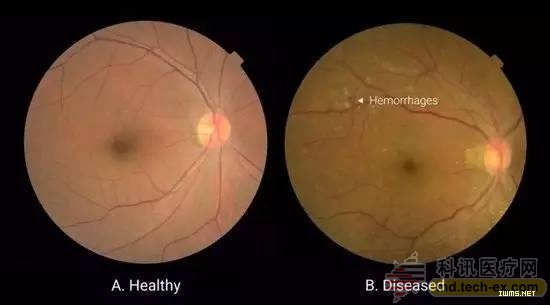

Examples of retinal fundus photographs from diabetic patients. The left image was taken from a diabetic patient with healthy eyes; the right image was taken from a patient with diabetic retinopathy and shows hemorrhages in the fundus.

Normally, doctors examine fundus images of diabetic patients to determine whether the retina has lesions, and judge severity from signs such as microaneurysms, fundus hemorrhages, and hard exudates, which mainly reflect bleeding and fluid leakage. This places high demands on the individual doctor's skill: without clinical experience, misdiagnosis or missed diagnosis is easy.

In 2016, Google researcher Varun Gulshan and his colleagues used deep learning to build an algorithm that detects diabetic retinopathy and diabetic macular edema, determining from a retinal image whether a patient's retina has lesions. This could support diagnosis where medical resources are limited. The study was published in the Journal of the American Medical Association on November 30, 2016 [2].

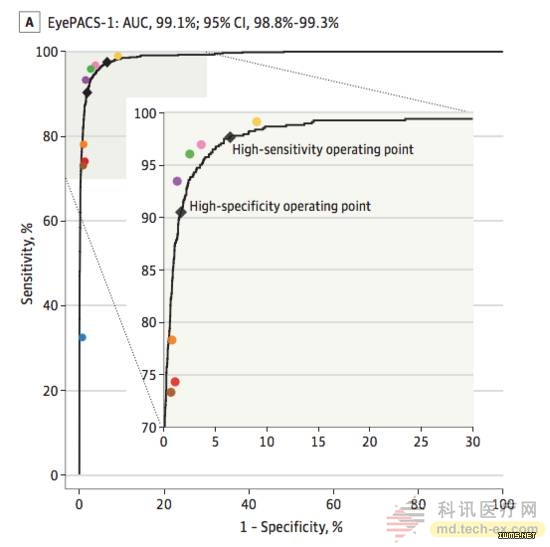

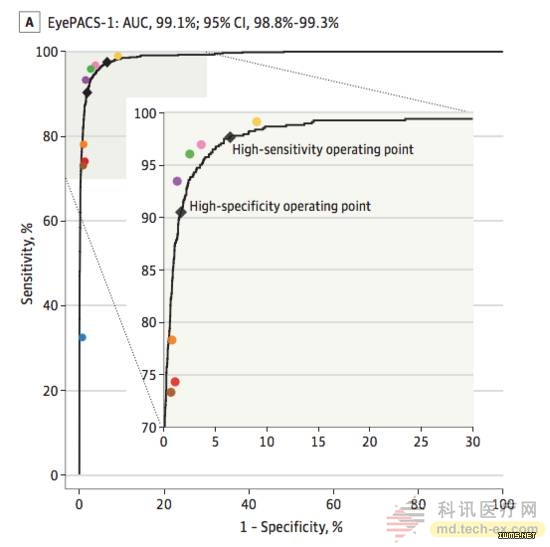

The black curve shows the performance of the algorithm, and the colored dots show the diagnoses of eight ophthalmologists for referable diabetic retinopathy (moderate or worse diabetic retinopathy, or referable diabetic macular edema).
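The algorithm appears as a curve while each ophthalmologist is a single dot because the algorithm outputs a continuous score that can be thresholded at any operating point, whereas a doctor makes one yes/no call per image. A minimal sketch of how such a receiver operating characteristic (ROC) curve is traced, assuming the figure plots sensitivity against 1 - specificity; the scores and labels below are made up.

```python
def roc_points(scores, labels):
    """Return (false positive rate, true positive rate) pairs.

    Sweeping the decision threshold from high to low traces the ROC curve.
    A human grader, who gives one yes/no call per image, is a single point.
    """
    pos = sum(labels)
    neg = len(labels) - pos
    points = [(0.0, 0.0)]
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 0)
        points.append((fp / neg, tp / pos))  # (1 - specificity, sensitivity)
    return points

def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area

# Made-up model scores and reference labels (1 = referable disease)
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1]
labels = [1, 1, 0, 1, 0, 1, 0, 0]
pts = roc_points(scores, labels)
print(pts)
print("AUC:", auc(pts))  # 0.8125 for this toy data
```

A doctor who flags 3 of the 4 diseased eyes and 1 of the 4 healthy ones sits at (0.25, 0.75), which on this toy data lies exactly on the model's curve; a dot below the curve means the model offers an operating point that is better on both axes.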

To train the algorithm, the researchers collected 128,175 annotated images from the EyePACS database, each graded by 3 to 7 ophthalmologists; in total, 54 ophthalmologists worked with the team. To verify the algorithm's accuracy, the researchers used two separate validation data sets, one of 9,963 images and one of 1,748 images. Compared with the diagnoses of 8 ophthalmologists, Google's algorithm even slightly outperformed the human physicians: it achieved an F score (a combined measure of sensitivity and specificity, with a maximum of 1) of 0.95, compared with a median of 0.91 for the eight ophthalmologists.
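The comparison above rests on a reference label per image and on standard accuracy measures. The sketch below assumes the reference label is a majority vote over each image's graders (with a hypothetical rule that ties count as positive) and computes sensitivity, specificity, and the common F1 score. F1 is the harmonic mean of precision and sensitivity, which may not be exactly how the study combined its metrics; all data here is invented.

```python
from collections import Counter

def majority_label(grades):
    """Reference label for one image: the most common grade among its graders.

    Hypothetical tie rule: a tie counts as positive (referable), a cautious
    choice for screening.
    """
    counts = Counter(grades)
    return 1 if counts[1] >= counts[0] else 0

def metrics(preds, refs):
    tp = sum(1 for p, r in zip(preds, refs) if p == 1 and r == 1)
    tn = sum(1 for p, r in zip(preds, refs) if p == 0 and r == 0)
    fp = sum(1 for p, r in zip(preds, refs) if p == 1 and r == 0)
    fn = sum(1 for p, r in zip(preds, refs) if p == 0 and r == 1)
    sensitivity = tp / (tp + fn)  # share of diseased eyes that are flagged
    specificity = tn / (tn + fp)  # share of healthy eyes correctly cleared
    precision = tp / (tp + fp)    # share of flagged eyes that are diseased
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {"sensitivity": sensitivity, "specificity": specificity, "f1": f1}

# Invented grades: each inner list holds 3 to 7 ophthalmologists' calls for one image
grades = [[1, 1, 0], [1, 1, 1, 0, 1], [0, 0, 1], [0, 0, 0, 0],
          [1, 0, 1, 1], [0, 1, 0, 0, 0, 0, 0]]
refs = [majority_label(g) for g in grades]
preds = [1, 1, 0, 0, 0, 0]  # hypothetical algorithm output
m = metrics(preds, refs)
print(refs, m)
```

Pooling several graders per image smooths out individual disagreement, which is why the reference standard can be more reliable than any single ophthalmologist it is compared against.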

The images used in this study are 2D images; Google DeepMind has begun applying a more precise and comprehensive 3D imaging technique, optical coherence tomography (OCT), to deep learning.

Chinese scientists use deep learning to screen congenital cataracts

Congenital cataract is a rare disease with features of both chronic and acute disease. It causes blindness and visual impairment, usually arises around birth or in childhood, and has an incidence of 0.05% in China.

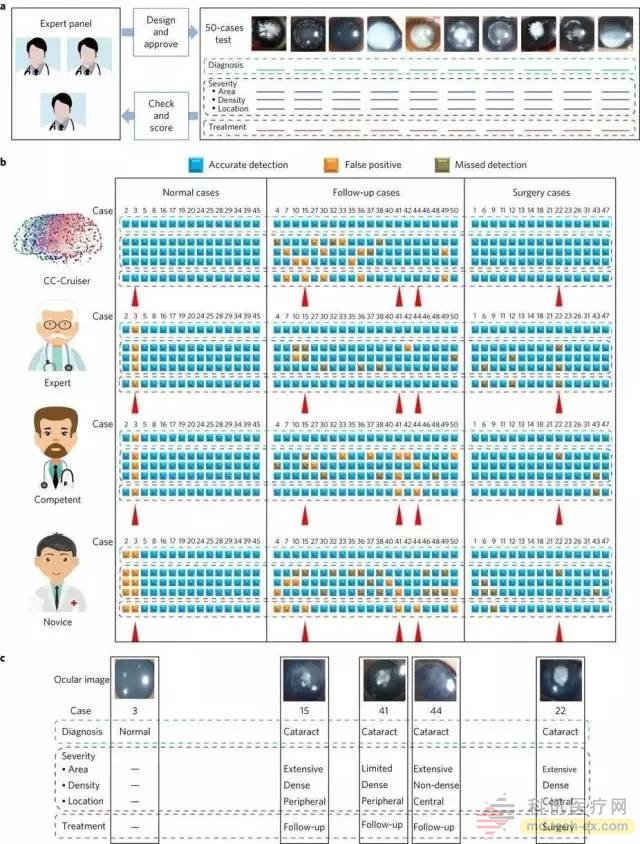

Inspired by a paper published by Google DeepMind in 2015, Lin Haotian, an ophthalmologist born in the 1980s at the Zhongshan Ophthalmic Center of Sun Yat-sen University, China, and his colleagues came up with the idea of building an artificial intelligence platform that mines their clinical data on congenital cataracts for screening and diagnostic assistance. Together with Professor Liu Xiyang of Xidian University (Xi'an University of Electronic Science and Technology), they used the winning model of ILSVRC 2014 (the ImageNet Large Scale Visual Recognition Challenge 2014), an architecture regarded as dominant in image recognition and usable for both training and classification, to build a deep learning model for identifying congenital cataracts, named CC-Cruiser. The results were published in the journal Nature Biomedical Engineering on January 30, 2017 [3].

To train CC-Cruiser, the researchers used a subset of routine examination images from the Chinese Ministry of Health's Children's Cataract Program (CCPMOH): 410 images of children with congenital cataracts of varying severity and 476 images of normal children's eyes. All images were independently classified and described by two experienced ophthalmologists, and a third ophthalmologist was consulted when they disagreed. None of these three physicians had been exposed to CC-Cruiser.
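The labeling protocol described above (two independent graders, with a third consulted only on disagreements) can be sketched as follows. The `adjudicate` function and the `third_opinion` lookup are hypothetical illustrations, and the labels are invented.

```python
def adjudicate(grader_a, grader_b, tiebreaker):
    """Combine two independent graders; consult a third only when they disagree.

    `tiebreaker` is a hypothetical callable that takes an image index and
    returns the third ophthalmologist's label for that image.
    Returns the final labels and the indices that needed adjudication.
    """
    final, disputed = [], []
    for i, (a, b) in enumerate(zip(grader_a, grader_b)):
        if a == b:
            final.append(a)  # agreement: accept the shared label
        else:
            disputed.append(i)
            final.append(tiebreaker(i))  # disagreement: third opinion decides
    return final, disputed

# Invented example with four images
grader_a = ["cataract", "normal", "cataract", "normal"]
grader_b = ["cataract", "cataract", "cataract", "normal"]
third_opinion = {1: "normal"}  # only disputed images reach the third grader

final, disputed = adjudicate(grader_a, grader_b, lambda i: third_opinion[i])
print(final, disputed)
```

Only disputed images cost a third reading, so the scheme keeps expert time low while still resolving every conflict.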

The figure shows the comparison test between CC-Cruiser and human physicians.

The researchers tested CC-Cruiser's performance five times, with excellent results. In a comparison with human ophthalmologists on 50 images, CC-Cruiser identified every patient with congenital cataract, while the three ophthalmologists erred on the third image, mistaking a bright highlight in the photo for congenital cataract. CC-Cruiser also performed well in risk assessment and decision-making, giving correct treatment recommendations to all patients who needed surgery. The researchers therefore believe that CC-Cruiser can be called a "qualified ophthalmologist."

Arterys becomes the first FDA-approved deep learning clinical application platform

4D cardiac MRI

In 2017, GE Healthcare teamed up with San Francisco startup Arterys to overhaul existing cardiovascular magnetic resonance imaging (commonly known as cardiac MRI). By adding a time dimension to 3D MRI, they extended it to 4D, which not only shows the structure of the heart from every direction but also shows the speed, direction, and volume of blood flow.

At the same time, Arterys launched Arterys Cardio DL, an application based on cloud computing and deep learning. It is the first FDA-approved deep learning application, which means deep learning and cloud technology will be further deployed in everyday medical services.

Arterys Cardio DL can be used for a variety of cardiovascular diseases, including congenital heart disease and aortic or valvular heart disease. It automatically delineates the inner and outer contours of the ventricles and calculates ventricular function quickly and precisely: analyzing one image takes about 10 seconds, much faster than a clinician. It has therefore been called an artificial intelligence medical image analysis system based on deep learning. It has been validated on data from thousands of cardiac cases, and experiments confirmed that its results are on par with analyses by experienced clinicians.
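One standard way to turn ventricular contours into a functional measure is the ejection fraction. The blood volume inside the inner contour is estimated at end-diastole (EDV) and end-systole (ESV) by summing each slice's area times the slice thickness (a method-of-discs approach), and EF = (EDV - ESV) / EDV. The sketch below assumes contours are polygons in millimetres on evenly spaced slices, and uses invented square contours; it is a textbook calculation, not Arterys' published algorithm.

```python
def polygon_area(points):
    """Area of a closed polygon [(x, y), ...] via the shoelace formula."""
    n = len(points)
    s = 0.0
    for i in range(n):
        x0, y0 = points[i]
        x1, y1 = points[(i + 1) % n]
        s += x0 * y1 - x1 * y0
    return abs(s) / 2.0

def cavity_volume_ml(contours_per_slice, slice_thickness_mm):
    """Sum of slice areas times slice thickness; 1 mL = 1000 mm^3."""
    total_mm3 = sum(polygon_area(c) for c in contours_per_slice) * slice_thickness_mm
    return total_mm3 / 1000.0

def ejection_fraction(edv_ml, esv_ml):
    """Fraction of the end-diastolic volume ejected in one beat."""
    return (edv_ml - esv_ml) / edv_ml

def square(side_mm):
    # Invented contour shape, just to keep the arithmetic easy to check
    return [(0, 0), (side_mm, 0), (side_mm, side_mm), (0, side_mm)]

edv = cavity_volume_ml([square(40)] * 10, slice_thickness_mm=8)  # 128.0 mL
esv = cavity_volume_ml([square(30)] * 10, slice_thickness_mm=8)  # 72.0 mL
ef = ejection_fraction(edv, esv)
print(edv, esv, ef)  # 128.0 72.0 0.4375
```

Automating the contour step is where most of the clinician's time goes; once the contours exist, the volume and EF arithmetic is quick.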

Through the GE Healthcare partnership, Arterys Cardio DL's functionality will be integrated into GE's innovative imaging technology ViosWorks, which presents the heart in seven dimensions: three spatial dimensions, one time dimension, and three velocity dimensions.
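The "seven dimensions" can be read as a 4D grid (three spatial indices plus time) where every grid point stores a 3-component velocity vector. A minimal sketch of that data layout, assuming a sparse dictionary representation with invented values in cm/s; this is not ViosWorks' actual data format.

```python
import math
from typing import Dict, Tuple

Voxel = Tuple[int, int, int, int]       # (x, y, z, t): 3 spatial + 1 time index
Velocity = Tuple[float, float, float]   # (vx, vy, vz): 3 velocity components

def speed(v: Velocity) -> float:
    """Magnitude of a blood-flow velocity vector."""
    return math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)

def peak_speed_per_frame(field: Dict[Voxel, Velocity]) -> Dict[int, float]:
    """Highest flow speed found anywhere in the volume at each time frame."""
    peaks: Dict[int, float] = {}
    for (x, y, z, t), v in field.items():
        peaks[t] = max(peaks.get(t, 0.0), speed(v))
    return peaks

# Invented sample: three voxels spread over two time frames
field = {
    (0, 0, 0, 0): (3.0, 4.0, 0.0),
    (1, 0, 0, 0): (0.0, 0.0, 1.0),
    (0, 0, 0, 1): (0.0, 6.0, 8.0),
}
print(peak_speed_per_frame(field))  # {0: 5.0, 1: 10.0}
```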

Admittedly, deep learning can help doctors read images more efficiently and accurately, but it still faces many obstacles and shortcomings in medical imaging.

The first problem is the amount of data. Training deep learning requires a large amount of high-quality data. One reason the Stanford researchers' skin cancer algorithm is so accurate is that their training data set is about 100 times larger than that of any similar previously published method. Because Lin Haotian and his colleagues had a small training set, some readers have questioned whether their high accuracy is the result of overfitting. Lack of data is a major obstacle to deep learning: medical data is scattered across hospitals and departments, which makes it hard to realize its full value. The dimensionality of the data is also a big issue; at present, deep learning is applied mainly to 2D images, while 3D and 4D images remain a challenge.

Second, deep learning is a "black box". Most researchers cannot access the lowest-level structure of the algorithm and simply feed data into it; they neither can nor need to explain how the model reaches its judgments. This "black box" can also be deceived: small changes to the input data can easily mislead a deep learning model.

In the above figure, each image in the left column has been transformed into the corresponding image in the right column. To us humans, the images look almost unchanged, yet the deep learning model that correctly classifies the left-column images misjudges the right-column ones. This shows that humans and deep learning models perceive images very differently.
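This effect can be reproduced even on a simple linear classifier. A minimal sketch of the fast gradient sign method (FGSM), assuming a hypothetical logistic-regression "model" with made-up weights rather than a real deep network: each input value moves by at most `eps` in the direction that increases the model's loss, and because many tiny changes add up across the input dimensions, the prediction flips while no single pixel changes noticeably.

```python
import math

def sigmoid(z):
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict_proba(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    """Fast gradient sign method for a logistic-regression model.

    For the log loss, the gradient with respect to the input is (p - y) * w,
    so each input value moves by eps in the direction of that gradient's sign,
    then is clipped to the valid pixel range [0, 1].
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [min(1.0, max(0.0, xi + eps * sign(g))) for xi, g in zip(x, grad)]

# Hypothetical model over a 1000-pixel "image"
w = [0.1 if i % 2 == 0 else -0.1 for i in range(1000)]
b = 0.5
x = [0.5] * 1000          # correctly classified as class 1
x_adv = fgsm(w, b, x, y=1, eps=0.01)

print(predict_proba(w, b, x))      # about 0.62 -> class 1
print(predict_proba(w, b, x_adv))  # about 0.38 -> class 0
```

Each pixel changes by only 1% of its range, but the shift in the model's score grows with the number of pixels, which is one common explanation for why high-dimensional image models are so easy to fool.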

Deep learning is still at the stage of "assisted diagnosis", and real decision-making power remains in doctors' hands. However, Tesla's autonomous driving has already been involved in a fatal accident, and deep learning can hardly reach 100% accuracy in medical imaging. If a doctor follows a deep learning system's advice and this leads to a misdiagnosis or missed diagnosis, who is responsible: the doctor or the machine? Deep learning brings medicine not only technical issues but also humanistic concerns. The interaction between doctor and patient will be the last bastion of human wisdom.

Source: Singularity Network
